Allegro Computers

Desktop Machines

The main computers at our disposal are:

| name | processor | clock speed | memory | alias | RHEL release |
|---|---|---|---|---|---|
| tulor | 40x Intel Xeon E5-2640 v4 | 2.40 GHz | 515.7 GiB | TU | 7 |
| helada | 32x Intel Xeon E5-2640 v3 | 2.60 GHz | 516.8 GiB | HE | 7 |
| chaxa | 32x Intel Xeon E5-2665 | 2.40 GHz | 258.3 GiB | CX | 7 |
| cejar | 16x Intel Xeon E5-2650 | 2.00 GHz | 32.1 GiB | CE | 7 |
| tebinquiche | 12x Intel Xeon E5645 | 2.40 GHz | 47.3 GiB | TQ | 6 |
| salada | 20x Intel Xeon E5-2630 v4 | 2.20 GHz | 31.9 GiB | SL | 7 |
| escondida | 16x Intel Xeon E5-2630 v3 | 2.40 GHz | 31.2 GiB | ES | 7 |

All these machines except tebinquiche run Red Hat Enterprise Linux Server release 7.5 (Maipo). The exception, tebinquiche, still runs release 6.10 (Santiago) and is kept for legacy purposes.

SL and ES are primarily intended as servers for the Lustre file systems, so it is best not to run intensive jobs on them.
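To make the table and the two caveats above concrete, the machine list can be held as plain data and filtered for hosts that suit heavy jobs. This is a minimal sketch, not an Allegro tool: the `Host` record and the `compute_hosts` helper are hypothetical, and "suitable" is the sketch's own criterion (running RHEL 7, i.e. not the legacy tebinquiche, and not one of the Lustre servers SL and ES).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    cores: int
    memory_gib: float
    alias: str
    rhel: int  # Red Hat Enterprise Linux major release


# Values copied from the machine table above.
HOSTS = [
    Host("tulor", 40, 515.7, "TU", 7),
    Host("helada", 32, 516.8, "HE", 7),
    Host("chaxa", 32, 258.3, "CX", 7),
    Host("cejar", 16, 32.1, "CE", 7),
    Host("tebinquiche", 12, 47.3, "TQ", 6),
    Host("salada", 20, 31.9, "SL", 7),
    Host("escondida", 16, 31.2, "ES", 7),
]

# Aliases of the machines that primarily serve the Lustre file systems.
LUSTRE_SERVERS = {"SL", "ES"}


def compute_hosts(min_memory_gib: float = 0.0) -> list[str]:
    """Hypothetical helper: RHEL 7 hosts that are not Lustre servers and have
    at least `min_memory_gib` of memory, largest memory first."""
    candidates = [
        h for h in HOSTS
        if h.rhel == 7
        and h.alias not in LUSTRE_SERVERS
        and h.memory_gib >= min_memory_gib
    ]
    candidates.sort(key=lambda h: h.memory_gib, reverse=True)
    return [h.name for h in candidates]


print(compute_hosts(min_memory_gib=100))
```

Sorting by memory is just one possible preference; a job limited by cores rather than memory would sort on `cores` instead.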

Hard Disks

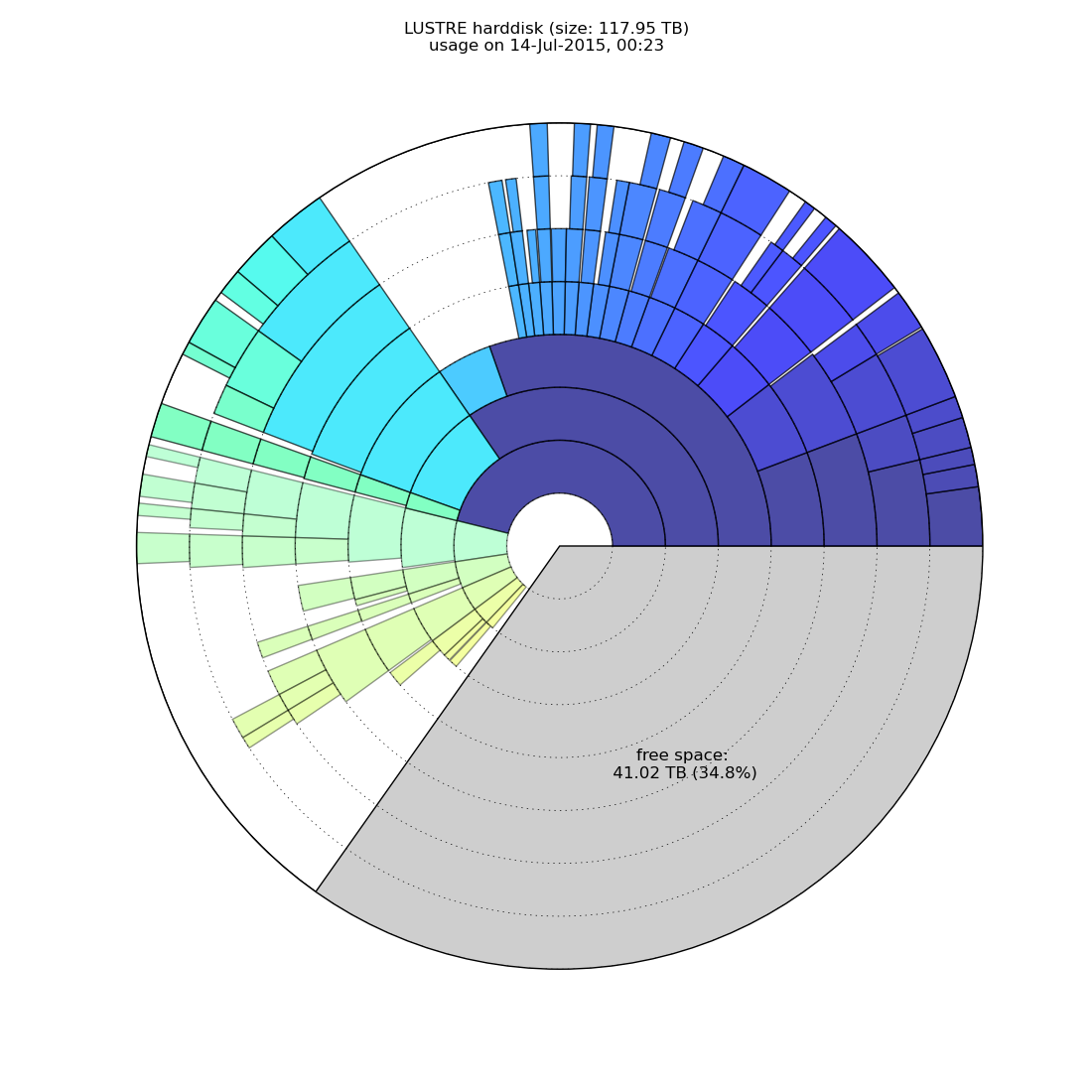

Figure 9: Baobab directory tree, with the directory name, size, fraction of disk space and Allegro ID (for Allegro projects) shown in the status bar.

Our data is stored on several file systems (three at last count). Two of these, /lustre1 and /lustre2, are Lustre file systems; the third, /allegro1, is mounted via the more standard NFS. Whether a file system is Lustre or NFS should be transparent to the user. Currently, all three are mounted under these names on all five Allegro compute nodes, and at least /lustre1 and /lustre2 can also be accessed from all STRW computers via /net/chaxa/lustre1 and /net/chaxa/lustre2 (at reduced speed). All Allegro data is currently stored in /lustre2/allegro. Any Sterrewacht user can store data on any of the Allegro file systems, although users are encouraged to work only on ALMA projects as set up by the wizard. Non-ALMA data should not be stored or processed on Allegro machines without prior arrangement.
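The /net/chaxa access route amounts to a simple prefix rule. The sketch below assumes that rule holds for any path below /lustre1 or /lustre2; `strw_path` is a hypothetical helper, and /allegro1 is deliberately not mapped because only the two Lustre file systems are said to be reachable this way.

```python
from pathlib import PurePosixPath

# Remote access route for STRW computers, as described above.
REMOTE_PREFIX = PurePosixPath("/net/chaxa")
LUSTRE_MOUNTS = (PurePosixPath("/lustre1"), PurePosixPath("/lustre2"))


def strw_path(path: str) -> str:
    """Translate a path on an Allegro node into the (slower) path seen from
    a regular STRW computer. Raises ValueError for non-exported file systems."""
    p = PurePosixPath(path)
    for mount in LUSTRE_MOUNTS:
        if p == mount or mount in p.parents:
            # Strip the leading "/" so the whole path is appended to /net/chaxa.
            return str(REMOTE_PREFIX / p.relative_to("/"))
    raise ValueError(f"{path} is not on a Lustre file system exported via chaxa")


print(strw_path("/lustre2/allegro/projects"))
```

Checking `mount in p.parents` instead of a plain string prefix avoids false matches such as a hypothetical /lustre20.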

Warning

Although your files on the Sterrewacht home area are backed up on a daily basis, there is no backup scheme in place for your files on the Allegro data disks. You are therefore advised NOT to store irreplaceable files on these disk systems.

Items such as Allegro-provided software and the data archive are replicated on all file systems, but the actual data is not.

Disk usage can be viewed with the tool

```
$ baobab_lustre.py -r <file system>
```

which is similar to the GTK tool baobab (see Figure 9). This in-house tool reads the output of the disk usage tool du. Because running du over a whole file system can take a long time, the computer group has installed a daily cron job that logs its result to $ALLEGRO_LOG/du_lustre/du.log.
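The format of du.log is not documented here. The sketch below assumes it holds plain du output, one `<size in KiB><TAB><path>` line per directory (GNU du's default), and shows roughly what a baobab-style view computes from such a log: the direct subdirectories of a directory with their size in decimal GB and their fraction of the parent. `parse_du`, `children_summary` and the sample numbers are hypothetical and not part of baobab_lustre.py.

```python
from __future__ import annotations

from pathlib import PurePosixPath


def parse_du(text: str) -> dict[str, int]:
    """Parse du-style lines '<size in KiB><TAB><path>' into {path: bytes}."""
    sizes: dict[str, int] = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        blocks, path = line.split("\t", 1)
        # du counts 1 KiB blocks, so multiply by 1024 to get bytes.
        sizes[str(PurePosixPath(path))] = int(blocks) * 1024
    return sizes


def children_summary(sizes: dict[str, int], parent: str) -> list[tuple[str, float, float]]:
    """Direct subdirectories of `parent`, largest first, as
    (name, size in decimal GB, fraction of the parent's size)."""
    parent = str(PurePosixPath(parent))
    total = sizes[parent]
    rows = [
        (PurePosixPath(path).name, size / 1e9, size / total)
        for path, size in sizes.items()
        if path != parent and str(PurePosixPath(path).parent) == parent
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Illustrative input in the assumed log format (not real Allegro numbers).
SAMPLE = (
    "1000000\t/lustre2/allegro/data/projects/a\n"
    "3000000\t/lustre2/allegro/data/projects/b\n"
    "4000000\t/lustre2/allegro/data/projects\n"
)

for name, gb, frac in children_summary(parse_du(SAMPLE), "/lustre2/allegro/data/projects"):
    print(f"{name:10s} {gb:8.3f} GB {frac:6.1%}")
```

If the log really has this format, it could be read on an Allegro node with `open(os.path.expandvars("$ALLEGRO_LOG/du_lustre/du.log")).read()` instead of the sample string.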

The Lustre architecture consists of a Metadata Server (MDS) and Object Storage Servers (OSSs). The MDS manages the file system metadata (file names, directories, permissions and file layouts), while the OSSs store the actual data. Currently, SL acts as the MDS.

To see how the data is distributed over the metadata target (MDT) and the object storage targets (OSTs), type:

```
$ lfs df -h
UUID                       bytes        Used   Available Use% Mounted on
lustre2-MDT0000_UUID      688.9G        4.8G      637.5G   1% /lustre2[MDT:0]
lustre2-OST0001_UUID       93.8T       76.3T       12.8T  86% /lustre2[OST:1]
lustre2-OST0002_UUID       94.7T       76.8T       13.2T  85% /lustre2[OST:2]

filesystem_summary:       188.5T      153.1T       25.9T  86% /lustre2

UUID                       bytes        Used   Available Use% Mounted on
lustre1-MDT0000_UUID      688.9G        1.7G      640.7G   0% /lustre1[MDT:0]
lustre1-OST0001_UUID       74.9T       26.4T       44.7T  37% /lustre1[OST:1]
lustre1-OST0003_UUID       21.5T       10.9T        9.5T  53% /lustre1[OST:3]
lustre1-OST0004_UUID      113.6T       11.5T       96.4T  11% /lustre1[OST:4]

filesystem_summary:       210.0T       48.8T      150.6T  24% /lustre1
```
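To spot OSTs that are filling up, this output can be parsed mechanically. The sketch assumes the six-column layout shown above; `parse_lfs_df` and `nearly_full` are hypothetical helpers, and the 80% threshold is an arbitrary example value.

```python
from __future__ import annotations


def parse_lfs_df(text: str) -> list[dict[str, object]]:
    """Parse `lfs df -h` text into one record per MDT/OST line."""
    targets = []
    for line in text.splitlines():
        fields = line.split()
        # Target lines: <uuid> <size> <used> <available> <use%> <mount[TYPE:index]>.
        # Header ("UUID ...") and "filesystem_summary:" lines do not match.
        if len(fields) == 6 and fields[0].endswith("_UUID"):
            uuid, size, used, avail, pct, mount = fields
            targets.append({
                "uuid": uuid,
                "kind": "MDT" if "[MDT:" in mount else "OST",
                "size": size,
                "used": used,
                "available": avail,
                "use_pct": int(pct.rstrip("%")),
            })
    return targets


def nearly_full(targets: list[dict[str, object]], threshold: int = 80) -> list[str]:
    """UUIDs of OSTs at or above `threshold` percent usage, in input order."""
    return [t["uuid"] for t in targets if t["kind"] == "OST" and t["use_pct"] >= threshold]


# The output shown above, with the columns collapsed to single spaces.
SAMPLE = """\
UUID bytes Used Available Use% Mounted on
lustre2-MDT0000_UUID 688.9G 4.8G 637.5G 1% /lustre2[MDT:0]
lustre2-OST0001_UUID 93.8T 76.3T 12.8T 86% /lustre2[OST:1]
lustre2-OST0002_UUID 94.7T 76.8T 13.2T 85% /lustre2[OST:2]
filesystem_summary: 188.5T 153.1T 25.9T 86% /lustre2
UUID bytes Used Available Use% Mounted on
lustre1-MDT0000_UUID 688.9G 1.7G 640.7G 0% /lustre1[MDT:0]
lustre1-OST0001_UUID 74.9T 26.4T 44.7T 37% /lustre1[OST:1]
lustre1-OST0003_UUID 21.5T 10.9T 9.5T 53% /lustre1[OST:3]
lustre1-OST0004_UUID 113.6T 11.5T 96.4T 11% /lustre1[OST:4]
filesystem_summary: 210.0T 48.8T 150.6T 24% /lustre1
"""

print(nearly_full(parse_lfs_df(SAMPLE)))
```

On an Allegro node the text could instead come from `subprocess.run(["lfs", "df", "-h"], capture_output=True, text=True).stdout`.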

Note

UNIX tools such as du and df report sizes in blocks of 1 KiB, i.e. 1024 bytes. For the difference between decimal and binary prefixes, see e.g. Wikipedia. All sizes in baobab are quoted in decimal notation, i.e. TB rather than TiB.
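A small conversion helps when comparing du/df (or `lfs df -h`) figures with baobab. The sketch assumes the T suffix printed by `lfs df -h` denotes TiB; under that assumption 1 TiB = 2^40 bytes ≈ 1.0995 TB, so the 153.1T used on /lustre2 corresponds to about 168.3 TB in decimal units. The function names are illustrative only.

```python
# Unit constants: du/df count 1 KiB blocks; baobab quotes decimal units.
KIB = 1024          # bytes per du/df block
TIB = 1024 ** 4     # tebibyte (binary prefix)
TB = 1000 ** 4      # terabyte (decimal prefix)


def blocks_to_bytes(blocks: int) -> int:
    """Convert a du/df size given in 1 KiB blocks to bytes."""
    return blocks * KIB


def tib_to_tb(tib: float) -> float:
    """Convert a binary size in TiB to decimal TB."""
    return tib * TIB / TB


# Used space on /lustre2 from the lfs df -h output above, read as TiB.
print(f"153.1 TiB = {tib_to_tb(153.1):.1f} TB")
```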

Directory Structure

All relevant Allegro files are found under the root directory <FS>/allegro, where <FS> is currently one of /lustre1, /lustre2 or /allegro1. The root directory contains the following subdirectories:

- allegro_staff: Restricted; only accessible to Allegro members. Contains:
  - bin: Binaries/executables for Allegro
  - doc: Documentation
  - etc: Miscellaneous useful files
  - projects: Allegro projects
  - src: Source files (e.g., Python modules, ...)
  - .db: Location of all the project database files. Should NOT be edited by hand.
- bin: Binaries/executables for everyone
- data: ALMA data
  - projects: Data from the projects
  - public_data_archive: Public data that is accessible to everyone
- doc: Documentation (e.g., ALMA handbooks, ...)
- etc: Startup scripts, etc.
- home: Home directories for users (mostly intended to house links to the project data directories)
- lib: Libraries (e.g., Python modules, ...)
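Since home is mostly meant to hold links to project data directories, creating such a link can be sketched with pathlib. The per-user layout `<FS>/allegro/home/<user>` and a per-project directory under data/projects are illustrative assumptions, and `link_project` is a hypothetical helper, not an Allegro tool. The demo runs in a temporary directory so that it touches no real data.

```python
import tempfile
from pathlib import Path


def link_project(allegro_root: Path, user: str, project_dir: Path) -> Path:
    """Hypothetical helper: create <allegro_root>/home/<user>/<project name>
    as a symbolic link pointing at project_dir (layout assumed)."""
    home = allegro_root / "home" / user
    home.mkdir(parents=True, exist_ok=True)
    link = home / project_dir.name
    # Leave an existing file or link alone so the call can be repeated safely.
    if not (link.is_symlink() or link.exists()):
        link.symlink_to(project_dir, target_is_directory=True)
    return link


if __name__ == "__main__":
    # Demo in a throwaway directory instead of a real <FS>/allegro tree.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp) / "allegro"
        project = root / "data" / "projects" / "example_project"
        project.mkdir(parents=True)
        link = link_project(root, "jdoe", project)
        print(link.relative_to(root), "->", link.resolve().relative_to(Path(tmp).resolve()))
```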